Gabriel Aguiar Noury

on 17 May 2021

The State of Robotics – April 2021

Together we have reached the end. Two partners, two allies, two distributions that supported millions of innovators have reached their end-of-life (EOL). April will be remembered as the month in which ROS Kinetic and Ubuntu Xenial reached EOL. ROS Kinetic is one of the most used, widely deployed and extensively contributed-to ROS distributions (1st with 1233 repos in ros/rosdistro). Released in 2016, it brought support for newer components, notably Gazebo 7 and OpenCV 3.

But the end has also brought opportunities, and today we will chat about them.

ROS migration

With ROS Kinetic and Ubuntu Xenial reaching EOL, you will stop receiving security updates. For this reason, we do not recommend staying on an unsupported distribution of Ubuntu or ROS.

We encourage you to explore migration options to keep your robot compliant with security maintenance frameworks.

Migration leads to new opportunities, such as moving to ROS 2 and starting to learn about DDS. Some people might find this troublesome, but it shouldn’t be. Learning about DDS will definitely help you, as more technologies, from microcontrollers to drones and autonomous cars, are using it as a reliable pub/sub framework. Migration opens the door to new knowledge and, with it, new fields.
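For readers new to the idea, here is a minimal sketch of the publish/subscribe pattern that DDS builds on. It is a toy, in-process illustration only: it is not DDS and not the ROS 2 API, and `TopicBus` and the topic names are hypothetical names invented for this example.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Callback = Callable[[Any], None]


class TopicBus:
    """Toy in-process bus: publishers and subscribers only share a topic name."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callback) -> None:
        """Register a callback to be called for every message on the topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> int:
        """Deliver the message to every subscriber; return the delivery count."""
        callbacks = list(self._subscribers.get(topic, []))
        for callback in callbacks:
            callback(message)
        return len(callbacks)


bus = TopicBus()
received: List[Any] = []

# The subscriber never learns who publishes; it only knows the topic name.
bus.subscribe("battery_level", received.append)

delivered = bus.publish("battery_level", 0.87)
ignored = bus.publish("unused_topic", "nobody is listening")
print(delivered, ignored, received)
```

The point of the pattern is decoupling: the publisher and the subscriber agree only on a topic. A real DDS implementation adds discovery across machines, typed messages and quality-of-service settings on top of this idea.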

To learn more about the implications of EOL, join us in our next webinar about ROS Kinetic EOL. Together with Sid Faber, our security expert and head of the robotics team at Canonical, we will explore the implications of EOL and security.

Flying on Mars, from Wright to Ingenuity

Yes, we’re still talking about this. Can you blame us? It’s simply a masterpiece of engineering. In April, humanity’s first space drone made its first flight on Mars. On its third flight, with its rotors spinning at over 2,500 revolutions per minute, Ingenuity autonomously rose to a height of 5 m before speeding off laterally for 50 m, about half the length of a football field. Ingenuity then came back to its take-off spot, for a total flight time of 80 seconds.

It has completed three flights in total as part of our effort to explore flight dynamics on Mars. Getting airborne on the planet is not easy. The atmosphere is thin, with just 1% of the density of Earth’s. This gives the blades of a rotorcraft very little to bite into to gain lift.
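To get a feel for what a 1% atmosphere means, here is a back-of-the-envelope sketch. It assumes the textbook relation that lift scales with air density times blade speed squared, with blade area and lift coefficient held fixed. The Mars gravity figure (about 38% of Earth’s) is a round number added for this example and does not come from the flight data above. The function name is hypothetical.

```python
import math


def blade_speed_ratio(density_ratio: float, gravity_ratio: float = 1.0) -> float:
    """Factor by which blade speed must change to keep the same mass aloft.

    Assumes lift is proportional to density * speed**2 and that the lift
    required is proportional to local gravity, which gives
    speed_new / speed_old = sqrt(gravity_ratio / density_ratio).
    """
    if density_ratio <= 0:
        raise ValueError("density_ratio must be positive")
    if gravity_ratio <= 0:
        raise ValueError("gravity_ratio must be positive")
    return math.sqrt(gravity_ratio / density_ratio)


# 1% density, same gravity as Earth (worst case for this simple model).
same_gravity = blade_speed_ratio(0.01)

# 1% density with Mars gravity assumed to be about 38% of Earth's.
mars_gravity = blade_speed_ratio(0.01, gravity_ratio=0.38)

print(f"Same gravity: blades must move {same_gravity:.1f}x faster")
print(f"Mars gravity: blades must move {mars_gravity:.1f}x faster")
```

Even with the help of weaker gravity, this simple model says the blades need to move roughly six times faster than they would on Earth for the same vehicle. That helps explain why a Mars rotorcraft needs large, light blades and very high rotor speeds. Real rotor aerodynamics are more involved than this estimate.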

“We’ve gone from ‘theory says you can’ to really now having done it. It’s a major first for the human race,” said MiMi Aung, project manager for Ingenuity at NASA’s Jet Propulsion Laboratory (JPL) in Pasadena, California.

We have to look back at this picture, where everything started, and remember that with perseverance and ingenuity we can accomplish anything.

Automation is just starting

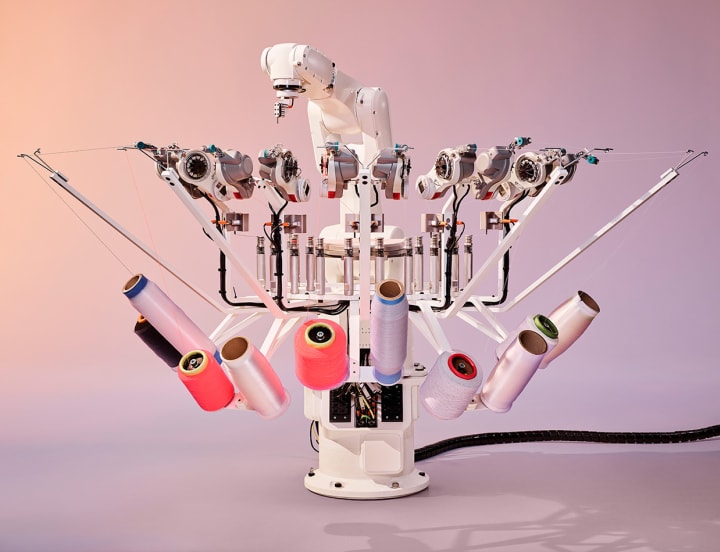

Just when you thought that robotic arm applications couldn’t surprise you anymore, Adidas opened the door to more automation. Robotic arms can be used in pick and place functions, assembly operations, arc welding, handling machine tools and more. We have adopted them to accelerate production, and today they are doing incredible things for footwear.

The STRUNG robot is an industry-first textile and creation process that allows Adidas to input athlete data into the precision placement of each thread to make the perfect shoe for athletes. The robot places each thread into a single composite with specific performance zones and properties. The result is a lightweight upper that’s precisely fitted for support, flex, and breathability—all within one piece of material.

Beginning with a 3D-printed sole, STRUNG can place some 2,000 threads from up to 10 different sneaker yarns in one upper section of the shoe. Adidas says it can make a single upper in 45 minutes and a pair of sneakers in 1 hour and 30 minutes. They plan to reduce this time down to minutes in the months ahead, the company said.

Adidas began working on the Futurecraft.Strung project back in 2016, and now we can finally expect STRUNG shoes on the marketplace later this year or sometime in 2022.

Walking the walk with wearables

We have seen what wearables can do for us. From rehabilitation to supporting those with physical disabilities, wearables have been helping people who have suffered injuries to achieve a more active lifestyle. Yes, most of them are expensive and require bulky equipment that cannot be used outside medical centres, but this is slowly changing.

Take, for instance, Ascend, a smart knee orthosis designed to improve mobility and relieve knee pain. This customisable, lightweight, and comfortable robot reduces the burden on the knee and intuitively adjusts support as needed. Designed for people suffering from osteoarthritis, knee instability, and/or weak quadriceps, Ascend is one of those wearables that can be used in our day-to-day lives.

But we are not stopping there. Researchers at the University of Waterloo in Canada are using artificial intelligence and wearable cameras to help robotic exoskeletons walk by themselves.

A big downside of wearables that support large parts of our body is that they rely on manual controls to switch from one mode of locomotion to another. Shifting from standing to walking, or from walking on level ground to walking up or down stairs, therefore needs to be done manually. A device stops being practical if you need to use a joystick or smartphone app every time you change the way you want to move.

The ExoNet project is the first open-source database of high-resolution wearable camera images of human locomotion scenarios. ExoNet contains over 5.6 million RGB images of indoor and outdoor real-world walking environments, which were collected using a lightweight wearable camera system throughout the summer, autumn, and winter seasons. Approximately 923,000 images in ExoNet were human-annotated using a 12-class hierarchical labelling architecture. The Waterloo team used this data to train deep-learning algorithms to recognise different walking environments with 73% accuracy.

Through this, the study aims to eliminate the manual control of wearables by giving systems the intelligence needed to automatically detect and select the right mode of locomotion.
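As a rough illustration of how per-frame predictions could drive mode switching, here is a minimal sketch. It is not the Waterloo team’s method: the `ModeSelector` class, the label names and the "agree for N consecutive frames" rule are all assumptions made for this example. The idea is that with a classifier that is right about 73% of the time, a controller would probably not want to switch modes on a single frame.

```python
from collections import deque
from typing import Deque, List


class ModeSelector:
    """Switch locomotion mode only after the classifier agrees for several frames."""

    def __init__(self, initial_mode: str, frames_required: int = 5) -> None:
        if frames_required < 1:
            raise ValueError("frames_required must be at least 1")
        self.mode = initial_mode
        # Sliding window holding the most recent predictions only.
        self._recent: Deque[str] = deque(maxlen=frames_required)

    def update(self, predicted_label: str) -> str:
        """Feed one per-frame prediction and return the current mode."""
        self._recent.append(predicted_label)
        window_full = len(self._recent) == self._recent.maxlen
        if window_full and len(set(self._recent)) == 1:
            self.mode = predicted_label
        return self.mode


# Hypothetical labels; these are not ExoNet's actual 12 classes.
frames: List[str] = [
    "level_ground", "stairs_up", "level_ground",
    "stairs_up", "stairs_up", "stairs_up",
]

selector = ModeSelector("level_ground", frames_required=3)
modes = [selector.update(label) for label in frames]
print(modes)
```

In this run the single stray `stairs_up` prediction in the second frame is ignored, and the mode only changes on the last frame, once three consecutive frames agree. A larger window makes switching more robust but slower to react, which is a trade-off a real controller would have to tune.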

micro-ROS and Raspberry Pi Pico

A combination from heaven. micro-ROS lets you bring microcontrollers into the same ROS framework. This enables innovators to easily connect microcontrollers, and with them different sensors and actuators, to their ROS 2 applications. Want to learn more? See our latest post on getting started with micro-ROS on the Raspberry Pi Pico.

ROS enterprise support

In April we announced our partnership with the maintainer of ROS, Open Robotics.

With ROS deployed as part of so many commercial products and services, it’s clear that our community needs a way to safely run robots beyond their software EOL.

That’s why both organizations have partnered to deliver ROS ESM, a service that guarantees companies receive security updates for their ROS and OS when they need them. No more waiting for security patches and CVE fixes. No more chasing package maintainers to get the results you require.

ROS ESM provides a single point of contact for all the software in ESM, including ROS, as opposed to trying to figure out where to log a bug or propose a fix and hoping it might get eyes at some point. Save engineering time and effort by contacting Canonical and Open Robotics for all the support you and your robot deserve. All in one place!

- Check our new website for ROS ESM.

Outro

With the end of two great distributions, we say goodbye to April. But the new opportunities opening up ahead look amazing! From humanity’s first flight on another planet to our constant search for better processes and technologies, we see a great future for robotics. We want to thank everyone who contributed to this amazing ROS distribution, and we look forward to what you bring next.

As always, we want to keep learning from you, whether from your innovations, products or research. We’d love to hear about your ROS and/or robotics-related project and feature it next month on our blog. Send a summary to [email protected], and we’ll be in touch.

Thanks for reading.